Editorial: I was falsely accused of using ChatGPT — and you might be too

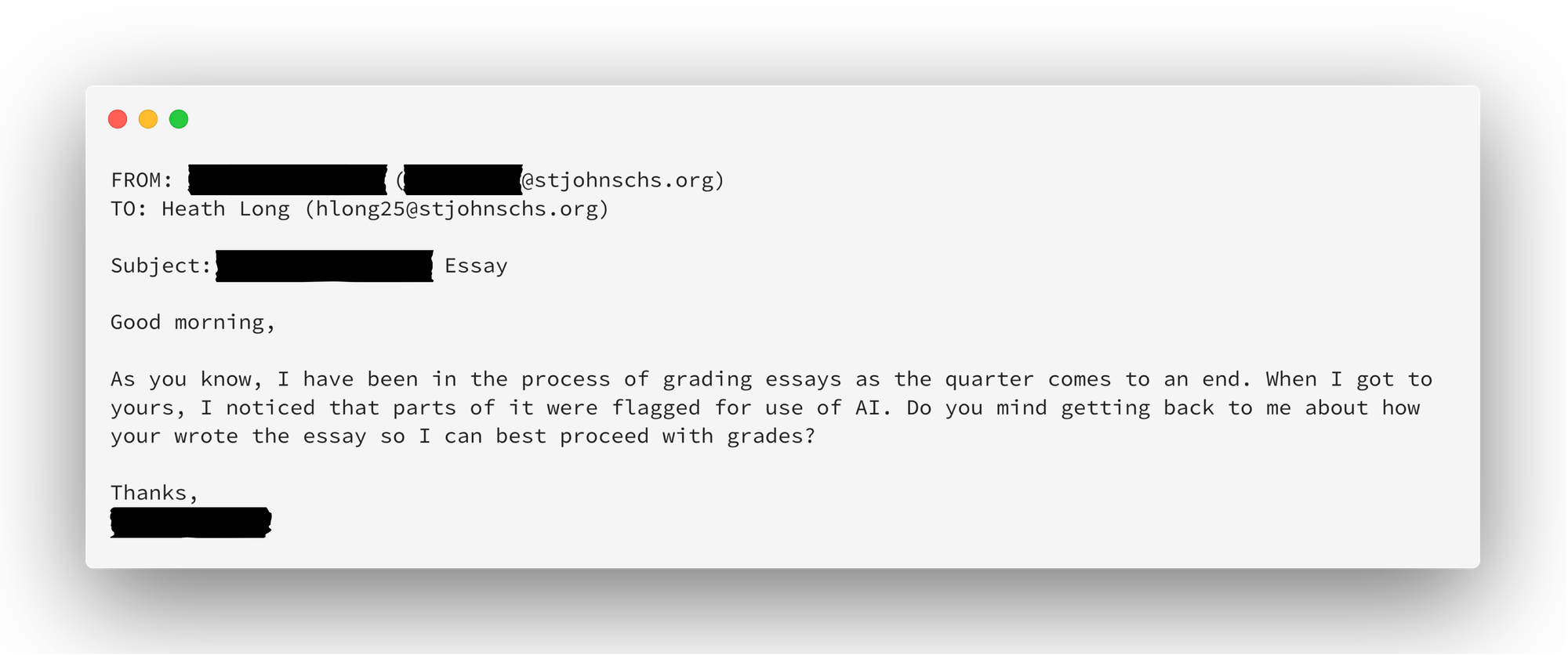

On Wednesday, October 25, 2024, I received an email that shook me to the core, thrusting me into a battle to defend my innocence and prove my integrity. It made me feel as if I were in Franz Kafka’s The Trial: accused of a crime but never shown any evidence of my guilt, hopelessly awaiting my sentence. I was put on the stand against an automated system that had determined me to be guilty, with almost no option of appeal. Here’s what happened, where these tools fall short, and how to defend yourself.

When I turned in my essay, I didn’t realize that I was subjecting myself to accusations and interrogation. However, when the email came back, it was clear what had happened: my professor had put my essay through Turnitin, a plagiarism and AI detector, and it had flagged my essay as partially AI-generated. The moment I read it, my stomach dropped. How was I meant to prove my own humanity?

Quickly, I scoured my paper for anything that would absolve me of guilt. The word processor I used, LibreOffice, didn’t store version history. I didn’t record myself writing the essay. I didn’t even ask anyone for help, so I had no one to vouch for me. The only thing I had was my list of sources in Noodle Tools. It was my word against the detector, in a twisted version of Man vs. Machine.

Thoughts raced through my head as I sensed the imminent doom of a false judgement. Would I get a zero? Would I be suspended? Expelled? As these potential outcomes clogged my mind, I began to panic, feeling myself fall into an anxiety attack. The world seemed to spin around me and my vision became foggy as the stress consumed me.

After I leveled my head, I reasoned that the only way I could save myself from a permanently altered life and a smear on my reputation was to prove that Turnitin was wrong; to prove that these tools were unreliable and inaccurate. With renewed passion, I began to collect data to support my hypothesis. I was essentially forced to write a second essay, one that would defend my first.

I never finished that essay. Instead, my teacher confronted me, and I learned a valuable lesson about the benefit of having a good relationship with your teacher and being an engaged and dedicated student. I explained my case, showing my citations in Noodle Tools and the preliminary sources I had gathered about Turnitin’s detection. I expected to be scolded, to be met with a disapproving face telling me that my fate had already been determined. Instead, they looked back at me with understanding, hearing out my situation and dropping all accusations, explaining that only the conclusion of my essay had been flagged as partially AI-generated. I let out a breath as I felt relief wash over me.

However, the situation could have been much worse. If my teacher hadn’t been so understanding, if they trusted Turnitin more, or if I didn’t have my sources, it could’ve led to me sitting in front of an academic board, facing the principal despite my innocence.

Digital McCarthyism

Turnitin has been a prominent figure in the war against academic dishonesty. Their plagiarism detection system, released in the late 90s, is highly effective and has been used by schools for over two decades. It's this reputation that makes it even more dangerous.

Tools like Turnitin promise teachers instant and accurate plagiarism and generative content detection.[1] They guarantee low false positives and include phrases like “Our advanced AI writing detection technology is highly reliable and proficient in distinguishing between AI- and human-written text and is specialized for student writing,” on their website, which evokes a high level of trust from educators, despite the technology being largely untested and rapidly evolving.[2]

As concerns rise over generative AI being used by students[3], teachers have increasingly been looking towards tools to combat the rise of Large Language Models (LLMs).

“Nearly two-thirds [of teachers surveyed] (64.7%) say they are ‘highly concerned’ or ‘somewhat concerned’ about the increasing use of these tools in the classroom.” - aiEDU Pulse Survey

School LMS (learning management system) platforms, like Canvas, give teachers the ability to easily and automatically plug essays into tools like Turnitin, beginning the digital crusade against students moments after their submission, leading to a phenomenon I’ve coined Digital McCarthyism.

These tools consistently fail to meet the accuracy rates they promise. For example, Turnitin promises a false positive rate of less than 1%.[1] In reality, a study done by Temple University showed only a 77% accuracy rate in detecting fully AI-generated text[3] and only a 93% rate of correctly identifying human-written text.[4]

While a 3.5% false positive rate (roughly the one human-written sample flagged out of the 29 that Turnitin could rate in that study[4]) might seem low, scaling it up to a whole school shows the frightening potential consequences. St. John's has around 1,300 students actively enrolled. If every student turned in an entirely human-written essay, Turnitin would incorrectly flag an average of 45.5 of those essays as AI-generated. With an average of six essays per English class each year, that works out to 273 false positives a year. Over a normal four-year high school education, where English is required every year, that adds up to 1,092 false accusations, an average of 0.84 per student. If each essay is flagged independently, roughly 57% of all students would receive at least one false positive before graduating.
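These back-of-the-envelope numbers can be reproduced with a short Python sketch. It uses only the figures from the paragraph above (1,300 students, a 3.5% false positive rate, six essays a year, four years); the function and variable names are made up for illustration. It also estimates the share of students flagged at least once, under the simplifying assumption that each essay is flagged independently, which is a different quantity from total flags divided by total students.

```python
def false_flag_estimates(students=1300, false_positive_rate=0.035,
                         essays_per_year=6, years=4):
    """Estimate false AI flags for a school where every essay is human-written."""
    # Expected number of wrongly flagged essays for a single assignment.
    flags_per_assignment = students * false_positive_rate
    # Scale up to a school year and then to a full high school career.
    flags_per_year = flags_per_assignment * essays_per_year
    flags_total = flags_per_year * years
    essays_per_student = essays_per_year * years
    # Average number of false flags each student collects before graduating.
    avg_flags_per_student = flags_total / students
    # Assumption: each essay is flagged independently with the same probability,
    # so the chance of never being flagged is (1 - rate) ** essays_per_student.
    share_flagged_at_least_once = 1 - (1 - false_positive_rate) ** essays_per_student
    return {
        "flags_per_assignment": flags_per_assignment,
        "flags_per_year": flags_per_year,
        "flags_total": flags_total,
        "avg_flags_per_student": avg_flags_per_student,
        "share_flagged_at_least_once": share_flagged_at_least_once,
    }


estimates = false_flag_estimates()
for name, value in estimates.items():
    print(f"{name}: {value:.3f}")
```

Changing `false_positive_rate` to Turnitin's advertised 0.01 shows how sensitive the totals are to that one number.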

On hybrid texts, Turnitin was worse than a coin flip, at only 43% accuracy.[5] Additionally, a study by the University of Maryland showed that it is trivially easy to evade AI detection without significantly diminishing the quality of the text by using recursive paraphrasers, which alter and reform the text, allowing it to evade watermark and zero-shot detection at a much higher rate.[6] As if it couldn't get worse, detectors have been shown to be biased against non-native English speakers, falsely flagging their writing at much higher rates.[7]

I don’t blame teachers or administrators for buying into this technology. The marketing around it is incredibly deceptive, and it would be unreasonable to expect all educators to understand and stay up to date with the latest advancements in Artificial Intelligence, LLMs, and detection software.

Turnitin uses this deception to make money at the expense of students.[8][9][10][11] Teachers are presented with an arbitrary percentage of text generated with AI instead of a confidence rating. No matter how many warning labels or disclaimers Turnitin adds, this presentation opens the floodgates for teachers who aren’t aware of the system’s flaws to compel students to defend themselves.

As of September 20, 55 institutions of higher education, including Yale, UMD, RIT, Michigan State, and UC Berkeley, have banned, advised against, stopped providing, or disabled their AI detection, citing accuracy tests and expressing concern about the potential consequences of false positives.[12]

“We do not believe that AI detection software is an effective tool that should be used.” - Vanderbilt University

Student Defense

With the frequency of false accusations, it is more important than ever to take proactive steps to shield yourself and prove your innocence.

Proactive Steps

Firstly, reduce your chances of being falsely flagged by electing not to use any software that utilizes an LLM. This includes spelling and grammar checkers that make use of AI, such as Grammarly.

Secondly, use a word processor that records your activity. Make sure you have a clear log of your typing. Google Docs and Microsoft Word automatically record version history, and other editors often have options to enable it.

Lastly, submit any drafts. If you wrote a rough draft for your essay, it might be advantageous to include it in your submission. Make sure it's clearly marked as such and separate from your final draft to avoid it mistakenly being graded.

Sadly, none of these methods are foolproof in proving your innocence, as they can be spoofed. If you're really worried about being falsely accused, the only verifiable way to defend yourself is to fully film yourself as you write your entire essay, with you, your devices, and your essay in full clear view.

Retroactive Steps

If you have been falsely accused of unapproved usage of AI tools, refute the claim calmly and politely. Attach this article to your response, along with any evidence you have of your innocence. Keep in mind that your fate is in your teacher’s hands, so be very cautious and don’t level accusations or blame against them.

Conclusion

As of September 28, 2024, St. John's still employs Turnitin, a platform more than two decades old, for AI and plagiarism checking. However, it's hard to say that Turnitin's AI detector, which has been out for a fraction of that time, has undergone adequate testing or that it keeps up with the rapid development of the LLM field. Until this system improves, teachers should re-evaluate their relationship with this technology. Otherwise, more innocent students will be caught in the crossfire of the war against AI.

[1] - “For documents with over 20% of AI writing, our document false positive rate is less than 1% as further validated by a recent 800,000 pre-GPT document test.”

[2] - “Natural language processing (NLP) has significantly transformed in the last decade, especially in the field of language modeling. Large language models (LLMs) have achieved SOTA performances on natural language understanding (NLU) and natural language generation (NLG) tasks by learning language representation in self-supervised ways.”

[3] - “Turnitin correctly identified 23 of 30 samples in this category as being 100% AI generated, or 77%. Five samples were rated as partially (52-97%) AI-generated. Two samples were not able to be rated.”

[4] - “Turnitin correctly identified 28 of 30 samples in this category, or 93%. One sample was rated incorrectly as 11% AI-generated, and another sample was not able to be rated.”

[5] - “Determining the correctness of Turnitin’s scores on the hybrid texts proved to be a challenging exercise. Technically, since the texts were all partly human-written and partly AI-generated, a “correct” score could be considered to be any score between 1-99% (in other words, not 0% and not 100%.) By that metric, Turnitin correctly identified 13 of 30 hybrid texts as being neither fully human-written nor fully-AI generated, or 43%. Of the remaining texts, 6 were identified as 100% AI and 7 were identified as 100% human-written. One text was unable to be rated.”

[6] - “In particular, we develop a strong attack called recursive paraphrasing that can break recently proposed watermarking and retrieval-based detectors. Using perplexity score computation as well as conducting various MTurk human study, we observe that our recursive paraphrasing only degrades text quality slightly. We also show that adversaries can spoof these detectors to increase their type-I errors.”

[7] - “This study reveals a notable bias in GPT detectors against non-native English writers, as evidenced by the high misclassification rate of non-native-authored TOEFL essays, in stark contrast to the near zero misclassification rate of college essays, which are presumably authored by native speakers.”

[8] - Turnitin was acquired in 2019 for $1.75 billion. It has also acquired Ouriginal, a competing software company. Turnitin is not publicly traded, so while its exact revenue isn't available, these acquisitions demonstrate a high valuation and substantial financial backing.

[9] - “UC Davis student Louise Stivers became the victim of her college's attempts to root out essays and exams completed by chat bots.”

[10] - “When William Quarterman was accused of submitting an AI-generated midterm essay, he started having panic attacks. He says his professor should have handled the situation differently.”

[11] - “Policy demands that I refer essays with high AI detection scores for academic misconduct, something that can lead to steep penalties, including expulsion. But my standout student contested the referral, claiming university-approved support software they used for spelling and grammar included limited generative AI capabilities that had been mistaken for ChatGPT.”

[12] - “This list highlights schools that have either banned or discontinued the use of AI detection tools.”